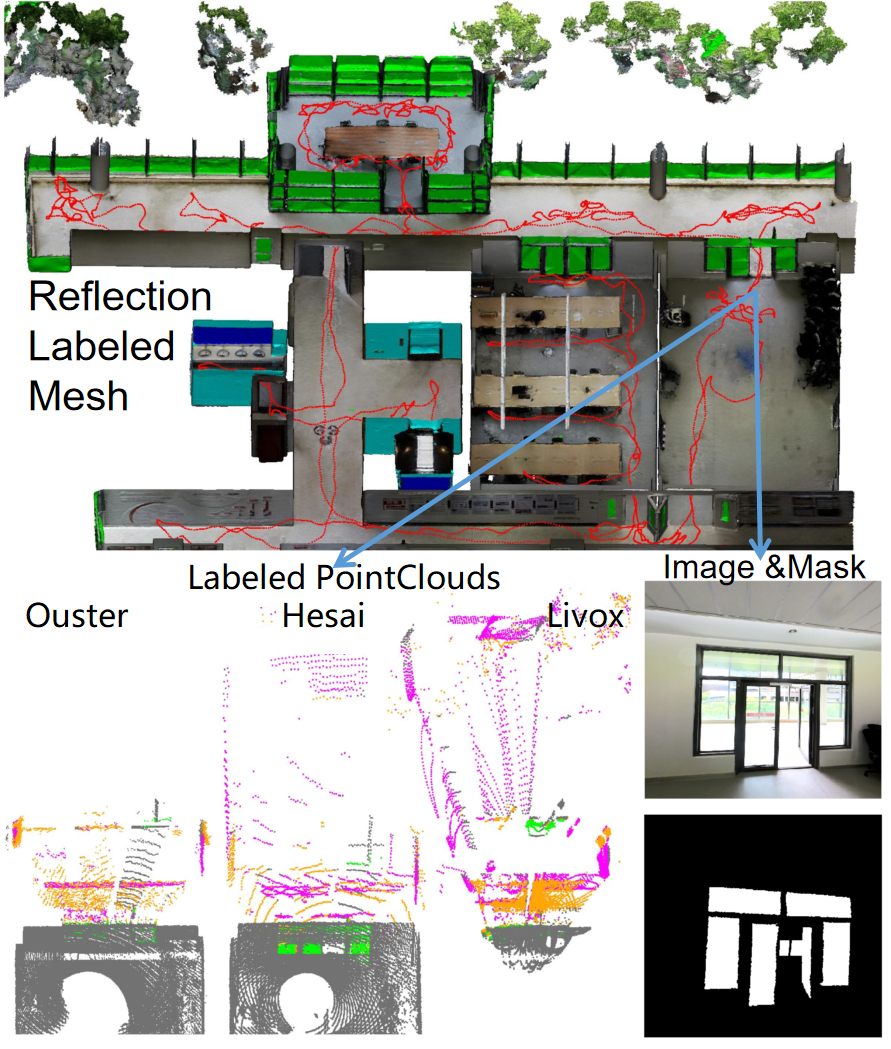

Dataset Overview

The 3DRef dataset consists of three sequences captured in diverse indoor environments with reflective surfaces such as glass, mirrors, whiteboards, and monitors. It provides:

- 48,024 labeled LiDAR point clouds from three sensors (Ouster, Livox, and Hesai)

- 3,799 labeled RGB images

- Precise global alignment of all data in a common coordinate frame

- Labeled ground-truth 3D meshes that enable automatic annotation of the point clouds
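Global alignment and labeled meshes together make label transfer possible: once a scan is expressed in the same frame as the mesh, each point can inherit the label of the nearby mesh surface. The sketch below illustrates that idea under loudly stated assumptions. It assumes each scan comes with a 4x4 homogeneous sensor-to-global pose and that the mesh carries per-vertex labels. The function names `to_global` and `transfer_labels`, the label values, and the `max_dist` threshold are all hypothetical and do not reflect 3DRef's actual file formats or annotation pipeline. Nearest-vertex lookup is a deliberate simplification; a real pipeline would more likely use point-to-triangle distance or ray casting against the mesh.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def to_global(points: Sequence[Point], pose: Sequence[Sequence[float]]) -> List[Point]:
    """Map sensor-frame points into the global frame using a 4x4 homogeneous pose.

    Assumption: pose[:3][:3] is the rotation and pose[:3][3] is the translation.
    """
    rot = [row[:3] for row in pose[:3]]
    trans = [row[3] for row in pose[:3]]
    return [
        tuple(sum(rot[i][j] * p[j] for j in range(3)) + trans[i] for i in range(3))
        for p in points
    ]


def transfer_labels(
    points: Sequence[Point],
    mesh_vertices: Sequence[Point],
    vertex_labels: Sequence[int],
    max_dist: float = 0.05,  # hypothetical threshold in metres
    unlabeled: int = -1,
) -> List[int]:
    """Give each point the label of its nearest mesh vertex (brute force).

    Points farther than max_dist from every vertex are marked `unlabeled`.
    """
    labels = []
    for p in points:
        best_d, best_label = math.inf, unlabeled
        for v, lab in zip(mesh_vertices, vertex_labels):
            d = math.dist(p, v)
            if d < best_d:
                best_d, best_label = d, lab
        labels.append(best_label if best_d <= max_dist else unlabeled)
    return labels


if __name__ == "__main__":
    # Hypothetical pose: identity rotation, 1 m translation along x.
    pose = [[1, 0, 0, 1.0], [0, 1, 0, 0.0], [0, 0, 1, 0.0], [0, 0, 0, 1]]
    scan = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    pts = to_global(scan, pose)  # [(1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
    verts = [(1.0, 0.0, 0.01), (5.0, 0.0, 0.0)]
    vert_labels = [1, 0]  # hypothetical: 1 = reflective surface, 0 = other
    print(transfer_labels(pts, verts, vert_labels))  # [1, -1]
```

The brute-force search is quadratic and only suitable for illustration; for full scans, a spatial index such as a k-d tree would be the usual choice.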